Virtual Play (2017) is the second part of the XR Play Trilogy, a practice-as-research investigation spearheaded by Dr. Néill O'Dwyer (producer and scenographer). It was produced under the remit of V-SENSE, led by Principal Investigator (PI) Aljosa Smolic, where O'Dwyer was director of creative experiments, and it was directed by Nicholas Johnson (Dept. of Drama, TCD).

It is a reinterpretation of Beckett's Play (1963), with a view to engaging a 21st-century viewership that is increasingly accessing content via virtual reality (VR) technologies. It is V-SENSE’s inaugural creative arts/cultural project, under the creative technologies remit. The project was conceived to demonstrate how VR content can be produced both cheaply and expertly, thereby challenging the notion that sophisticated VR content is exclusively the domain of wealthy institutes and production houses. The core technology enabling this novel type of creative production, i.e. VR/AR content creation based on 3D volumetric video techniques, was developed by V-SENSE researchers David Monaghan, Jan Ondrej, Konstantinos Amplianitis and Rafael Pagés, who subsequently spun out an innovative VR production company called Volograms.

Under the guidance of Néill O’Dwyer (Producer), this virtual reality response to Play attempts to push the limits of what is possible in consumable video and film by harnessing the new power of digital interactive technologies, in order to respond to Samuel Beckett’s deep engagement with the stage technologies of his day.

A central goal of the project is to address an ongoing concern in the creative cultural sector: how to handle narrative progression in an interactive immersive environment. It is believed that by placing the viewer (audience) at the centre of the storytelling process, they are more appropriately assimilated into the virtual world and are thereby empowered to explore, discover and decode the story, as opposed to passively watching and listening. This is something that has been effectively harnessed by the gaming sector using procedural graphics and animation, but film and video, which rely on audio-visual capture techniques, have struggled to engage this problem effectively. As such, this project attempts to investigate these new narrative possibilities for interactive, immersive environments.

In order to investigate this problem, V-SENSE drafted in the expertise of Samuel Beckett scholar Nicholas Johnson (Director). By joining the project, Nick brings with him a wealth of knowledge in relation to the complexities, technicalities and nuances of staging Beckett productions. The project is complementary to his ongoing work with the Samuel Beckett Laboratory and the forthcoming research project Intermedial Beckett, and will feed into research questions in contemporary Beckett Studies and the methodologies of interdisciplinary practice-as-research. Three professional actors – Colm Gleeson, Caitlin Scott and Maeve O’Mahony – round out the Drama team. All three are trusted collaborators of Johnson and have experience with Beckett texts in performance. A high degree of precision from the actors is crucial to the success of the project, because of the difficulty of post-producing video footage captured on multiple devices.

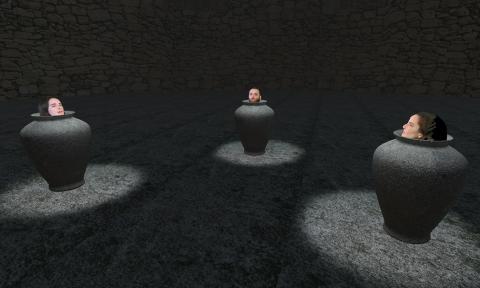

In terms of the mise en scène, the strategy consists in constructing a 3D re-interpretation of Beckett’s scene and characters, which he describes as ‘lost to age and aspect’ (Beckett, 1963), using bespoke volumetric video techniques for capturing live action. The actors are recorded against a green screen, using a multiple camera setup. Their foreground masks are extracted from the background using segmentation techniques. These masks are combined to create a dynamic photo-realistic 3D reconstruction of every actor in the scene. These reconstructions are then imported into a game engine and combined with virtual set elements, in order to create the immersive VR experience. The game engine software is also used to implement the rules and conditions that define the user interaction and behaviour.
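The segmentation step can be illustrated with a minimal sketch. It is not the V-SENSE pipeline, whose actual segmentation method is not described here; it assumes a simple chroma-key rule in which a pixel is background when its green channel is bright and clearly dominates red and blue. The function name `green_screen_mask` and the `dominance` and `min_green` thresholds are hypothetical and chosen only for illustration.

```python
import numpy as np

def green_screen_mask(frame_rgb, dominance=1.3, min_green=80):
    """Return a boolean foreground mask for an RGB frame of shape (H, W, 3).

    Illustrative chroma-key rule (an assumption, not the project's method):
    a pixel is background when green is bright and clearly dominates
    both the red and the blue channels.
    """
    rgb = frame_rgb.astype(np.float32)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    background = (g >= min_green) & (g > dominance * r) & (g > dominance * b)
    return ~background

# Tiny synthetic frame: a green backdrop with a 2x2 skin-toned "actor" patch.
frame = np.zeros((4, 4, 3), dtype=np.uint8)
frame[...] = (20, 200, 30)           # green screen
frame[1:3, 1:3] = (180, 140, 120)    # foreground pixels
mask = green_screen_mask(frame)
```

In a multi-camera setup, one such mask would be computed per camera view and per frame before the masks are combined into a 3D reconstruction. Real footage typically needs more robust handling of shadows, colour spill and soft edges than this threshold rule provides.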

In order to enhance the immersive nature of the scene, V-SENSE has also enlisted Enda Bates (Sound Designer), a lecturer on the Music and Media Technologies (MMT) master's programme in the Department of Electrical and Electronic Engineering. Enda’s work with the Spatial Audio Research Group in Trinity and the ongoing Trinity360 project concerns the use and production of spatial audio with six degrees of freedom (6DoF) for Virtual Reality, Augmented Reality and 360 Video. Enda deploys Ambisonic audio and spatial audio SDKs for game engines in order to give the user a perception of depth, distance and audio directivity in the virtual world. The implementation of the audio for viewing volumetric video is the main focus of Enda’s contribution to the project. He achieves this by synthesizing directivity patterns relating to the locations of the viewer and characters within the virtual set design.
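To give a rough sense of what a directivity pattern driven by viewer and character positions might look like, the following sketch computes a gain from a first-order (cardioid-family) pattern combined with inverse-distance attenuation. This is a generic textbook model offered as an assumption, not the method or SDK used in the project. The function `directivity_gain` and its parameters `alpha` and `ref_distance` are hypothetical names.

```python
import math

def directivity_gain(source_pos, source_facing, listener_pos,
                     alpha=0.5, ref_distance=1.0):
    """Gain heard by a listener from a directional source.

    Assumed model: first-order pattern alpha + (1 - alpha) * cos(theta),
    where theta is the angle between the source's facing direction and
    the direction to the listener, scaled by inverse-distance attenuation.
    alpha = 0.5 gives a cardioid; alpha = 1.0 is omnidirectional.
    """
    to_listener = [l - s for l, s in zip(listener_pos, source_pos)]
    dist = math.sqrt(sum(d * d for d in to_listener))
    if dist == 0.0:
        return 1.0  # listener at the source position: no attenuation
    facing_norm = math.sqrt(sum(f * f for f in source_facing))
    cos_theta = sum(d * f for d, f in zip(to_listener, source_facing)) / (dist * facing_norm)
    pattern = alpha + (1.0 - alpha) * cos_theta
    # Inside the reference distance the gain is clamped rather than boosted.
    attenuation = ref_distance / max(dist, ref_distance)
    # abs() returns the magnitude; for alpha < 0.5 the rear lobe is phase-inverted.
    return abs(pattern) * attenuation

# A character at the origin facing along +x, heard from four viewer positions.
front = directivity_gain((0, 0, 0), (1, 0, 0), (1, 0, 0))
side = directivity_gain((0, 0, 0), (1, 0, 0), (0, 0, 1))
behind = directivity_gain((0, 0, 0), (1, 0, 0), (-1, 0, 0))
far_front = directivity_gain((0, 0, 0), (1, 0, 0), (2, 0, 0))
```

Under this model a viewer walking around a character hears the voice at full level from the front, at half level from the side, and silenced directly behind, with the level also falling off as the viewer moves away.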

The project is an important milestone for V-SENSE, because it is the inaugural artistic-cultural experiment under the creative technologies remit, as defined by Prof. Aljosa Smolic (PI) in his procurement of funding from Science Foundation Ireland (SFI). It represents a significant effort both within the research group, because it draws together discrete areas of computer science research, and in the college as a whole, because it engenders interdisciplinary collaboration across the departments of Computer Science, Drama, and Electrical and Electronic Engineering.